A single chip such as the Intel Xeon Phi has a computational power in excess of 1 TFLOPS and features more than a hundred billion transistors. Few people outside the world of semiconductor engineering appreciate this, but that is a fantastical number: 100,000,000,000. If every transistor were a pixel, you would need a wall of 100 × 100 4K TV screens to display them all!
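As a quick sanity check of that comparison, here is the arithmetic as a small Python sketch. It takes the 100-billion figure above at face value and assumes a 4K UHD screen of 3840 × 2160 pixels; the function names are purely illustrative.

```python
import math

# Assumption: a 4K UHD screen is 3840 x 2160 pixels.
UHD_4K_PIXELS = 3840 * 2160  # 8,294,400 pixels per screen


def screens_needed(n_transistors: float) -> float:
    """Number of 4K screens needed to show one transistor per pixel."""
    return n_transistors / UHD_4K_PIXELS


def square_wall_side(n_transistors: float) -> int:
    """Side (in screens) of the smallest square wall that holds them all."""
    return math.ceil(math.sqrt(screens_needed(n_transistors)))


if __name__ == "__main__":
    n = 100e9  # transistor count taken at face value from the text
    side = square_wall_side(n)
    print(f"{screens_needed(n):,.0f} screens, i.e. a {side} x {side} wall")
```

This gives about 12,000 screens, i.e. a square wall of roughly 110 × 110, so 100 × 100 is the right order of magnitude.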

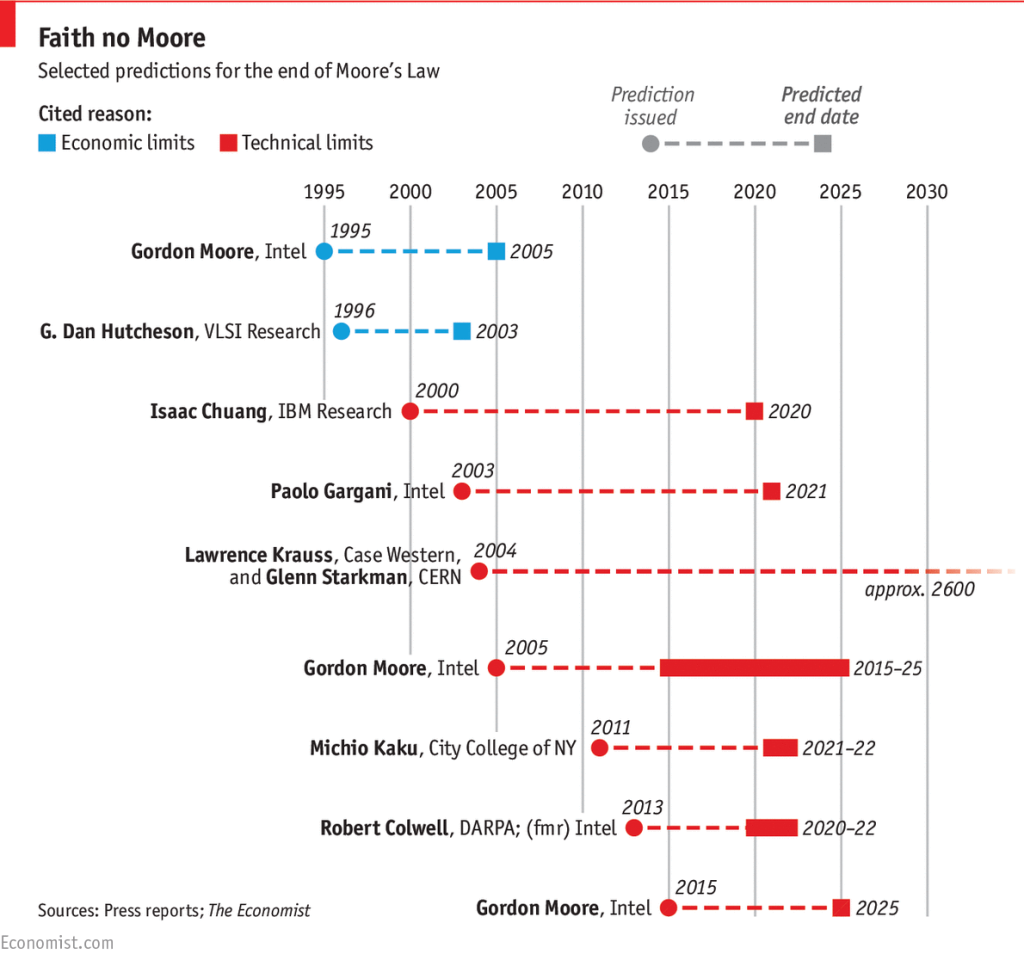

Over the past fifty years, the semiconductor industry has achieved incredible things, in part thanks to planar technology, which allowed the manufacturing process to scale exponentially, following Moore’s law. But it seems that we’re about to hit a wall soon.

Let’s give an overview of where we stand, and where we go from here! While there’s still room to grow in terms of efficiency (see “Limits on fundamental limits to computation”, published in Nature (paywalled) and also available on arXiv), hard physical limits will soon curb our enthusiasm when it comes to making smaller devices.

EUV lithography, the last frontier?

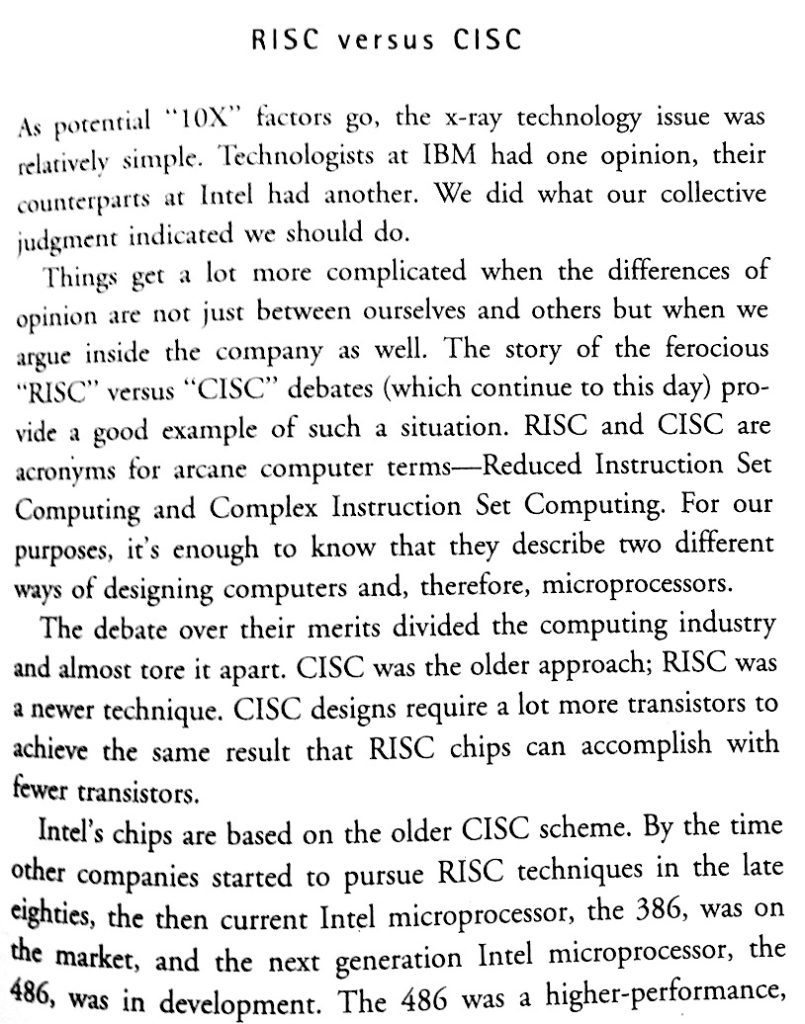

For the past five years, I’ve been working on EUV lithography, the next generation of semiconductor manufacturing, which is about to replace the 193i (193 nm immersion) process. There are still a few hurdles, notably patterned mask inspection and source power, but we’re getting there. It sure took a while: back in 1994, Andy Grove was already talking about x-ray lithography in his book “Only the Paranoid Survive”. He was also prescient in predicting the rise of RISC architectures, which came with the pivot to mobile that Intel missed big time, to the benefit of ARM and Qualcomm.

Excerpt from “Only the Paranoid Survive” by Andy Grove (1994), talking about EUV (x-ray) lithography

While recording at-wavelength images of EUV photomasks, I happened to observe the effects of atomic-scale defects, either in the form of defects buried under the multilayer or caused by the intrinsic roughness of the substrate.

Phase and amplitude image of an EUV photomask, with a defect (in red) corresponding to a 1.5 nm imperfection in the substrate

EUV lithography will enable manufacturing down to the 2 nm node or below (if we use double patterning), but things will become increasingly difficult, if not outright impossible: how do you add dopants to a gate only a few atoms wide?
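To put a number on “a few atoms wide”, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, a cube of crystalline silicon 2 nm on a side (node names like “2 nm” are labels rather than physical gate lengths), silicon’s lattice constant of 0.5431 nm with 8 atoms per cubic unit cell, and a few typical doping concentrations; the helper names are hypothetical.

```python
# Back-of-the-envelope estimate of atom and dopant counts in a tiny silicon cube.
# Illustrative assumptions: a 2 nm cube of crystalline silicon, uniform doping.

SI_LATTICE_CONSTANT_NM = 0.5431  # silicon (diamond cubic) lattice constant
ATOMS_PER_UNIT_CELL = 8          # atoms per conventional diamond-cubic cell
NM_PER_CM = 1e7


def silicon_atoms(side_nm: float) -> float:
    """Approximate number of Si atoms in a cube with the given side length."""
    unit_cells = (side_nm / SI_LATTICE_CONSTANT_NM) ** 3
    return unit_cells * ATOMS_PER_UNIT_CELL


def dopant_atoms(side_nm: float, concentration_per_cm3: float) -> float:
    """Expected number of dopant atoms for a uniform doping concentration."""
    volume_cm3 = (side_nm / NM_PER_CM) ** 3
    return volume_cm3 * concentration_per_cm3


if __name__ == "__main__":
    side = 2.0  # hypothetical feature size in nm
    print(f"Si atoms in a {side} nm cube: {silicon_atoms(side):.0f}")
    for conc in (1e18, 1e19, 1e20):
        print(f"  expected dopants at {conc:.0e} cm^-3: {dopant_atoms(side, conc):.3f}")
```

Under these assumptions the cube holds about 400 silicon atoms, and even at a heavy doping of 10^20 cm^-3 the expected number of dopant atoms is only about 0.8: below one, so doping becomes a matter of placing individual atoms.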

Inefficiencies – the curse of bandwidth

But even if we can’t go much smaller, there are still many things that we can already do to continue increasing the computational power. Among these is the way we handle data.

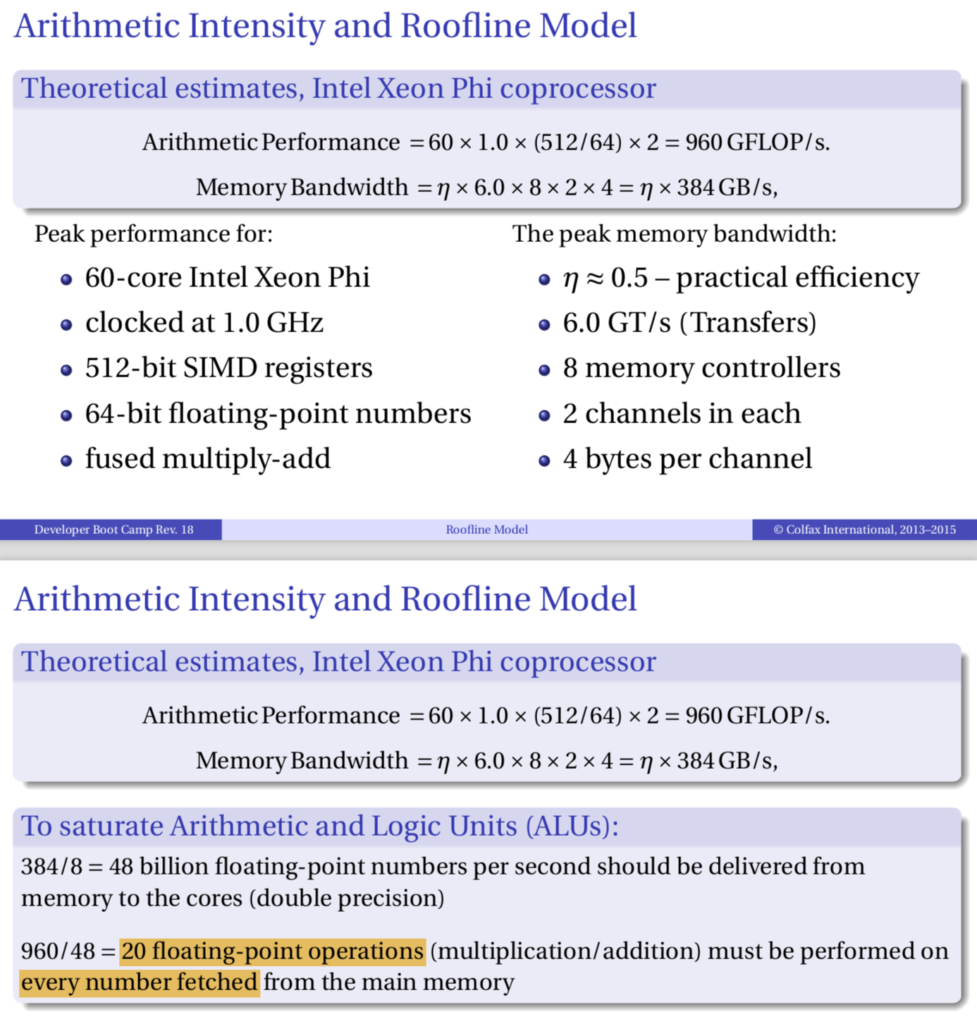

A representative from Colfax, an HPC consulting firm that hosted a workshop at Berkeley Lab on how to make the best use of supercomputers such as Cori at NERSC, made the point that most supercomputers are not meant to crunch data, because today’s bandwidth is much too slow compared to the processing power: by a factor of 20!

Slide from Colfax showing that processors can be idle 95% of the time (1/20 duty cycle) when they are bandwidth-limited
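One standard way to reason about this is the roofline model, in which attainable performance is the smaller of peak compute and memory bandwidth × arithmetic intensity (floating-point operations per byte moved). The sketch below is not Colfax’s analysis; the 1 TFLOPS peak, 100 GB/s bandwidth and 0.5 flop/byte kernel are hypothetical numbers chosen to reproduce a 1/20 duty cycle.

```python
# Minimal roofline-style estimate of how busy a bandwidth-limited processor is.
# All hardware numbers below are hypothetical, chosen only for illustration.


def attainable_flops(peak_flops: float, bandwidth_bytes_s: float, flops_per_byte: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_s * flops_per_byte)


def utilization(peak_flops: float, bandwidth_bytes_s: float, flops_per_byte: float) -> float:
    """Fraction of peak compute actually used (the 'duty cycle')."""
    return attainable_flops(peak_flops, bandwidth_bytes_s, flops_per_byte) / peak_flops


if __name__ == "__main__":
    peak = 1e12        # hypothetical 1 TFLOPS chip
    bandwidth = 100e9  # hypothetical 100 GB/s memory bandwidth
    intensity = 0.5    # hypothetical streaming kernel: 0.5 flop per byte loaded
    u = utilization(peak, bandwidth, intensity)
    print(f"duty cycle: {u:.0%}, idle: {1 - u:.0%}")
    # Arithmetic intensity needed to keep the cores fully busy
    print(f"break-even intensity: {peak / bandwidth:.0f} flop/byte")
```

With these numbers the processor runs at 5% of peak and sits idle 95% of the time; a kernel would need about 10 flop/byte before compute, rather than bandwidth, becomes the limit.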

Therefore, increasing the number of transistors doesn’t necessarily make sense for most applications, and the slowdown in scaling offers a respite that can be used to optimize bandwidth and other bottlenecks.

And bandwidth matters all the more given that two big new applications have recently emerged that demand a lot of both computational power and bandwidth: machine learning and cryptocurrency mining.

Silicon photonics was a big thing a few years ago (I remember seeing Mario Paniccia at SPIE Advanced in 2015 or 2016 presenting Intel’s effort; he has since left the company). The idea was to increase bandwidth, albeit not within the chip itself. Another big fizzle was Hewlett Packard’s “The Machine”, based on silicon photonics and memristors, which completely failed to deliver despite the incredible science behind it (and the heroic efforts of Stanley Williams).

Alternative routes

There are a few promising orthogonal routes: neuromorphic computing, quantum computing, and the return of analog computing.

One of the problems of current computer architectures is the way they handle and process data. A classical example is the comparison between the human brain and a computer chip: the base efficiency of the former is much higher, because of the many connections between the different parts of the brain. I was talking with Ivan Schuller from UCSD last October (he wrote a fascinating report for the DOE on neuromorphic computing), but it wasn’t clear where this one is going.

Along similar lines, there are startups like Koniku that develop chips with actual biological neurons… That’s kind of crazy, but the good kind :)

Another avenue is quantum computing, which is a very hot field right now. The idea is to get rid of bits and replace them with qubits, which can hold a superposition of information and collapse it to yield the solution to specific problems very efficiently (see my previous post… I’ve since organized a few events at the lab around quantum computing, such as the one posted below).

In the past few months, companies such as IBM and Microsoft have demonstrated devices with over 49 qubits, considered the threshold for achieving quantum supremacy. I’m still waiting to see tangible results beyond the PR stunts, but I’m hopeful (I have a bunch of friends working in QC, but frankly I don’t understand much, since I can’t keep up: that field is moving soooooo fast!). It seems, however, that quantum computing only supplements classical computing in areas where the latter is not very efficient, and given that QC is (usually) reversible, it cannot possibly replace our current computers.
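For readers who want something more concrete than “superposition” and “collapse”, here is a toy single-qubit state-vector sketch in plain Python. It applies a Hadamard gate to |0⟩, computes measurement probabilities with the Born rule, checks that applying the gate again undoes it (quantum gates are unitary, hence reversible), and estimates the memory a classical simulator would need for n qubits, assuming 16 bytes per complex amplitude. The helper names are illustrative, not from any particular library.

```python
import math

# Toy single-qubit state-vector simulation (illustrative sketch, not a real QC library).
INV_SQRT2 = 1 / math.sqrt(2)

# Hadamard gate as a 2x2 matrix (list of rows)
H = [[INV_SQRT2, INV_SQRT2],
     [INV_SQRT2, -INV_SQRT2]]

# Basis state |0> as a list of complex amplitudes
KET0 = [1 + 0j, 0 + 0j]


def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    return [sum(gate[i][j] * state[j] for j in range(2)) for i in range(2)]


def probabilities(state):
    """Born rule: each measurement outcome has probability |amplitude|^2."""
    return [abs(amplitude) ** 2 for amplitude in state]


def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to store all 2**n amplitudes (16 bytes assumes complex128)."""
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    plus = apply(H, KET0)  # equal superposition of |0> and |1>
    print("P(0), P(1):", probabilities(plus))
    # Unitary gates are reversible: applying H twice returns |0>
    back = apply(H, plus)
    print("back to |0>:", math.isclose(abs(back[0]), 1.0))
    for n in (30, 40, 49):
        print(f"{n} qubits -> {statevector_bytes(n):.2e} bytes")
```

At 49 qubits the full state vector takes 2^53 bytes, roughly 9 × 10^15 bytes (about 9 PB), which is one commonly cited reason why figures around 50 qubits were seen as the point where brute-force classical simulation becomes impractical.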

When I visited the Computer History Museum, I was fascinated by the analog differential analyzers, which at the time outperformed digital computers for a specific set of tasks. Around the same period, people were also using spatial light filtering to perform Fourier transforms, before Cooley and Tukey devised the Fast Fourier Transform algorithm. With machine learning, there seems to be a renewed interest in analog computing, with companies such as LightOn, and with people formalizing the equivalence between digital and analog computers (see e.g. Amaury Pouly’s fascinating PhD dissertation, “Continuous models of computation: from computability to complexity”).

Closer to me, people are studying skyrmions, topological entities that can carry information and could possibly enable incredible data rates, but I’m unsure whether it will one day be possible to alter their state and compute with them directly.

More than Moore

Oh boy, I really hate this term… I won’t go any further!