Everything that can be measured can be managed – Peter Drucker

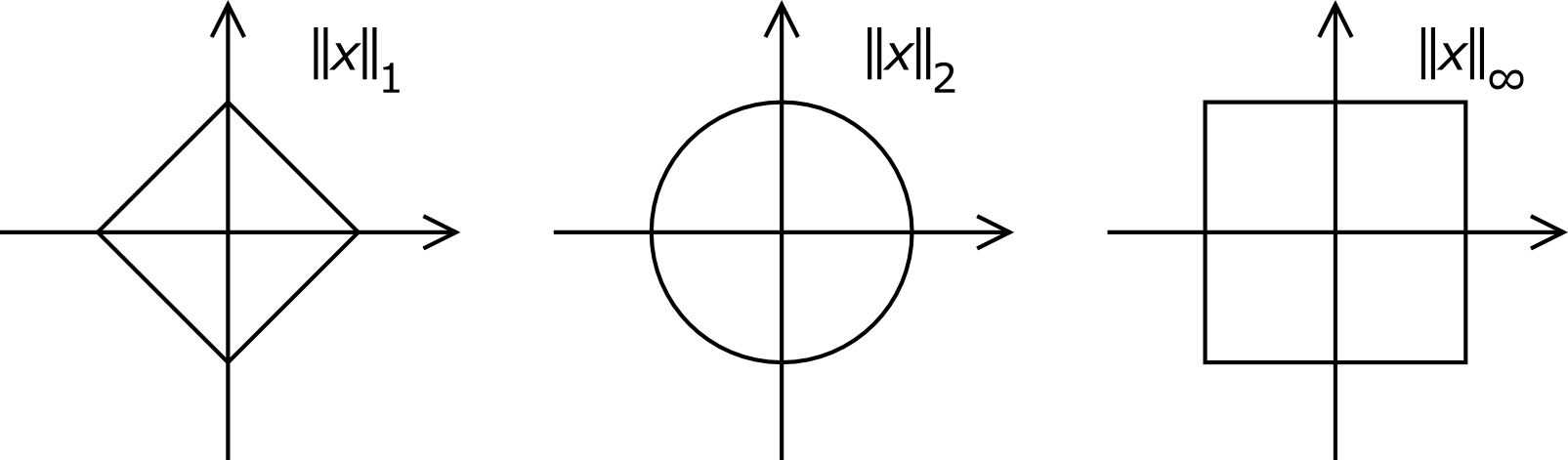

Because measuring allows us to make decisions, by enabling evaluation and comparison between elements, and thus to make optimal choices and improvements. But measuring something is not always easy.

When talking about a measure, one immediately thinks of a ruler or a scale, which gives a straightforward indication of the height or the mass of an object. But this is true only for one-dimensional measurements (and when the quantity can actually be measured… what is the weight of your soul?).

When it comes to multidimensional problems, it is necessary to define a metric. In two dimensions, a few norms attract the most attention:

||x||_1 = |x_1|+|x_2|

||x||_2 = (|x_1|^2+|x_2|^2)^(1/2)

||x||_p = (|x_1|^p+|x_2|^p)^(1/p)
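As a quick illustration of the three formulas above, here is a minimal sketch in Python. The function name `p_norm` and the sample point are my own choices for the example, not anything standard.

```python
def p_norm(x, p):
    """Return the p-norm of a sequence of numbers, for p >= 1."""
    if p < 1:
        raise ValueError("p must be >= 1 for this to be a norm")
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)


# Hypothetical sample point, chosen so that the results are easy to check by hand.
point = (3.0, 4.0)

norm_1 = p_norm(point, 1)    # |3| + |4|
norm_2 = p_norm(point, 2)    # sqrt(3^2 + 4^2)
norm_50 = p_norm(point, 50)  # for large p, the largest coordinate dominates

print(norm_1, norm_2, norm_50)
```

The same point gets a different "size" under each norm, which is exactly why a choice has to be made.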

But these norms assume that all dimensions can be treated equally. On many occasions, it is necessary to add some subjectivity. To the question “Who is the bigger, Paul (1.90 m, 60 kg) or Joe (1.60 m, 90 kg)?”, the Body-Mass Index (BMI = weight/height²) gives you an answer: BMI(Paul) = 16.6 < BMI(Joe) = 35.2 (it also tells you that Joe is obese). Even though BMI is ubiquitous in health surveys and seems to work relatively well, it is highly subjective. Quetelet (the guy who invented it) probably found that BMI was equivalent to the mass density of a person (if you consider that people are square and that everybody has the same thickness, that makes sense), provided you relax unit cancellation (Munroe, don’t!).
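The BMI comparison can be checked in a couple of lines. This is only a sketch: the function name `bmi` is mine, and the threshold of 30 for obesity is the commonly used WHO cut-off, which the text above does not state explicitly.

```python
def bmi(mass_kg, height_m):
    """Body-Mass Index: mass divided by height squared, in kg/m^2."""
    return mass_kg / height_m ** 2


paul = bmi(60, 1.90)
joe = bmi(90, 1.60)

# Assumption: 30 kg/m^2 is the usual cut-off for obesity.
OBESITY_THRESHOLD = 30.0

print(f"Paul: {paul:.1f}, Joe: {joe:.1f}, Joe obese: {joe >= OBESITY_THRESHOLD}")
```

Two incommensurable numbers per person have been collapsed into one, and the ranking follows from that choice of formula alone.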

The problem of subjectivity is more acute in scientific bibliometrics: to rate a scientist, you can use the total number of publications, the total number of citations or a mix thereof with advanced weighting such as the impact factor. You can also use the h-index or the g-index, which are more subtle ways to perform bibliometrics and fight against “publication farms” (so numerous are the labs where you are required to publish 3 papers or more during your thesis, no matter how interesting the results are! This is why the triptych theory/experiment/discussion is so often scattered across many articles).

Another field that requires metrics and some subjectivity is multidimensional optimization. For optimization, it is necessary to attribute a scalar value (a real number) to a multidimensional object (note that the word ‘scalar’ refers to ‘scale’, and thus to measure!). This miracle is achieved by defining what is called a merit, cost or objective function (even though it can be very subjective ;-), which tries to reflect all the properties of an object (or the difference between two objects) in a single value. Then you can compare values in a totally ordered set (the complex numbers have no total order compatible with their arithmetic, so a complex value must be considered multidimensional) and optimize, using algorithms such as convex optimization (gradient, etc.), the Nelder-Mead method, Metropolis-Hastings, genetic algorithms or adaptive learning (depending on the fuckedness of your problem).

It seems to me that, in general, people (in physics) do not care enough about that function, which is nevertheless essential for stability and convergence, especially when the dimensions of the problem are incommensurable. This defect can be mitigated by using covariant optimization algorithms (MacKay’s ITILA, chap. 34, p. 441), which is the raison d’être of algorithms such as conjugate gradient descent.

Statistics is the art of measuring ill-defined concepts (what is “the age” of a population?). Statisticians have created many tools for measuring and aggregating data, the most used being of course the mean and the standard deviation.

They also defined measures of the quality of the data or of an estimator (which is bounded by the famous Cramér-Rao lower bound). Correlation coefficients are useful to measure the strength of the relation between two variables, but they sometimes fail (think of two variables that are related by lying on a circle). But all these metrics depend heavily on the data set itself: you have to make numerous assumptions to be able to use your tools (Gaussian? stationary? hypothesis?), and these are often very subjective. But every scientist's dream of being objective is a fallacy: this is the never-ending debate between Bayesians and frequentists.
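The failure of correlation coefficients on circular data, mentioned above, is easy to reproduce. The sketch below is my own illustration: it places points evenly on the unit circle, so that x and y are perfectly tied together by x² + y² = 1, and computes the usual Pearson coefficient by hand. The helper name `pearson` and the number of points are arbitrary choices.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Points evenly spread on the unit circle: y is fully determined by x up to a sign.
n_points = 360
angles = [2 * math.pi * k / n_points for k in range(n_points)]
xs = [math.cos(a) for a in angles]
ys = [math.sin(a) for a in angles]

r_circle = pearson(xs, ys)                        # essentially zero
r_line = pearson(xs, [2 * x + 1 for x in xs])     # a straight line, for contrast

print(f"circle: {r_circle:.3f}, line: {r_line:.3f}")
```

The coefficient only sees linear relations: the circle scores about as badly as pure noise would, while the straight line scores perfectly.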

When it comes to mathematics and science, there seems to be a computability bias (I shall discuss this point further in a future article) in the definition of metrics and methods. People might use suboptimal solutions only because they understand them. For example, given a set of datasets, people will in general take the mean of the datasets to obtain an aggregated set, since it is easy to manipulate and study mathematically, while many other methods would be more suitable (e.g. median filtering).

Sometimes, establishing a ranking can lead to some very dishonest manipulations: as Dr Goulu analyzed in a post, given a set of objects with some properties, you can always get a desired ranking by finding the right weighting of the properties (well, not always: you have to allow the weights to be negative…).

There is also a strong cognitive bias linked with the measurement process and the presentation thereof. We, as humans, constantly perform measurements. We carry a lot of very sensitive instruments (the so-called five senses, but there are actually many more sensors spread over our body), some of them being extremely precise (e.g. the eye; another example: entropy, the measure of disorder, which is surprisingly easy to evaluate through temperature). But they have to deal with so many orders of magnitude that our brain processes the information so that we end up with relative information (see Fechner’s law).
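For reference, Fechner's law is usually written as below. The notation is my choice: p is the perceived intensity, S the physical magnitude of the stimulus, S_0 the detection threshold and k a constant that depends on the sense involved.

```latex
p = k \ln \frac{S}{S_0}
```

Multiplying the stimulus by a fixed factor therefore adds a fixed amount to the perception, which is why we end up with relative rather than absolute information.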

We are also often fooled by the way things are (badly) stated. For example, to the question “which is safer, the car or the plane?”, you might consider the death rate per kilometer or per trip: you won’t get the same answer, though both are correct.

Another example: the MPG illusion, described by R. Larrick and J. Soll and championed by C. Sunstein, which is a relativity trick:

If you are buying or trading in a car, what mpg increases are worthwhile in terms of reduced gas consumption and carbon emissions? Certainly a car that gets 50 mpg looks great compared to one that gets 33 mpg. But many other trade-ins for small improvements didn’t seem worthwhile. Why bother trading in a 16 mpg car for a 20 mpg one? Why bother putting hybrids on huge SUVs (like the Chevy Tahoe or the Cadillac Escalade), increasing their mpg from 12 to 14? What’s the environmental payoff?

Surprisingly, however, for the same distance driven, each of the improvements listed above is equally beneficial in reducing gas use. They all save about 1 gallon over 100 miles and 100 gallons over 10,000 miles (with a little rounding). Without question, 50 mpg is the most efficient level and ideally everyone would strive for it. But, if we are simply considering changes to existing vehicles, 16 to 20 mpg can help save as much gas as 33 to 50 mpg.
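The arithmetic behind this claim takes a few lines to check. This is just a sketch of the computation for the three upgrades quoted above; the function name `gallons_used` is mine.

```python
def gallons_used(mpg, miles=100.0):
    """Fuel burned, in gallons, to drive `miles` at a given miles-per-gallon rating."""
    return miles / mpg


# (old mpg, new mpg) pairs taken from the quoted examples.
upgrades = [(12, 14), (16, 20), (33, 50)]

savings = {
    (old, new): gallons_used(old) - gallons_used(new)
    for old, new in upgrades
}

for (old, new), saved in savings.items():
    print(f"{old} -> {new} mpg saves {saved:.2f} gallons per 100 miles")
```

All three savings land close to one gallon per 100 miles, even though the jumps in mpg look very different.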

This is why the European l/100km is a much more effective figure for fighting gas emissions (it is simply the inverse of the mpg, up to a unit conversion: comparisons can now be made serially, and not in parallel). This example comes from ‘Thinking, Fast and Slow’ by Daniel Kahneman, which is probably the best non-fiction book I’ve read this year.

Now, if you want to choose a new cell phone, how will you make your decision? Will you weigh all the specs one by one and come up with a decision? OK, but these specs do not include what cannot be measured (or, more precisely, specified), such as the ergonomics of the operating system or the design. It turns out that the price of things is ultimately a metric that incorporates a lot of information about the objects, so that they can be ranked in order of performance. This aggregated information comprises the quality, the demand, the design, the production cost, etc., of the product: price is a signal, they say. But I’m not sure, contrary to proponents of free markets, that this is all there is (what will I find in ‘How to Measure Anything’ by D. W. Hubbard?).

I used to be a utilitarian. The main problem with the related principles is that, in life, there is uncertainty, and thus no objective measure. That is, you cannot decide by yourself: if you want to maximize the utility of a population, you must first be sure of what is useful for the individuals. And that is really, really hard.

I now tend to think that the real difference between left-wing and right-wing parties lies in the way each defines individual and societal utility: for the right wing, there is no “penalty” for poverty, while there is a strong one for the left wing.