There are a lot of things happening on the AI for Big Science front (AI for large-scale facilities, such as synchrotrons).

The recently published DOE report, AI for Science, Energy, and Security, provides interesting insights and a much-needed update to the AI for Science Report of 2020. Computing facilities are upgrading to give scientists the tools to engage with the latest advances in machine learning. I recently visited NERSC’s Perlmutter supercomputer, and it is LOADED with GPUs for AI training.

Meanwhile, companies with large computing capabilities are making interesting forays into AI for science. Meta, for instance, is developing OpenCatalyst in collaboration with Carnegie Mellon University, with the goal of creating AI models that speed up the study of catalysts, whose simulation is generally very computationally intensive (see the Berkeley Lab Materials Project). Now the cool part is verifying these results using x-ray diffraction at synchrotron facilities. Something similar happened with AlphaFold, where newly predicted structures may need to be tested with x-rays at the Advanced Light Source: Deep-Learning AI Program Accurately Predicts Key Rotavirus Protein Fold (ALS News).

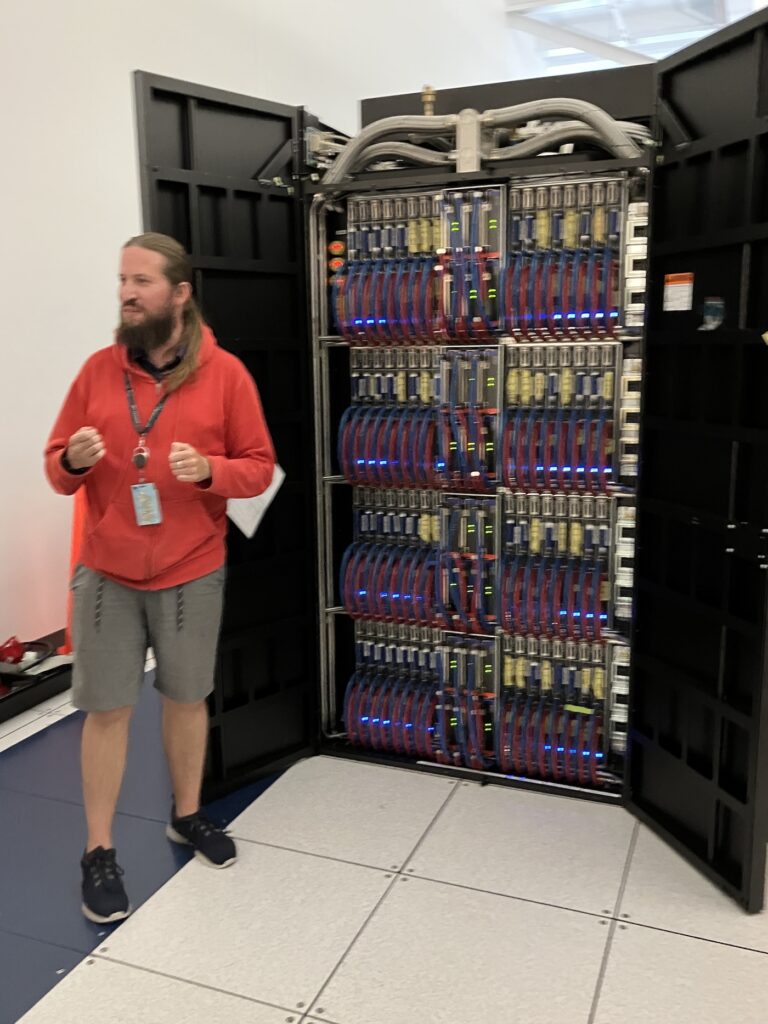

NERSC’s Cori supercomputer at Berkeley Lab (previously in the top 5 of the TOP500 ranking of supercomputers), photographed in August 2023, a few months after its decommissioning in May 2023.

Aurora

Earlier this year I was lucky enough to visit the machine rooms of Aurora, an exascale supercomputer currently being commissioned at Argonne National Laboratory. It is a BEAST. Unlike NERSC’s machines, however, Aurora belongs to the Leadership Computing Facility family of DOE supercomputers, and it is generally not open to external users.