At the moment (April 2019), the economy is in a weird quantum superposition of doom (yield curve inversion) and exaltation, with Lyft recently joining the public markets (always loved the irony of the term…)

I’ve been talking to a few people involved in driverless cars and AI lately, asking them… when? They usually tell me “soon”. The problem, they say, is that learned neural networks are almost impossible to debug. You can try to get insight into how they work (see the fascinating Activation Atlas), but it’s really difficult to rewire them.

And it’s actually pretty easy to hack them: you can add small random perturbations until you find noise that activates the whole network. In the real world, you can put stickers at just the right location and… make every other car crash. Pretty scary…

Sure, you can try to use Generative Adversarial Networks (GANs) to fight against that, but it’s not guaranteed that this will work; sensitivity might actually be baked into the system.

Adversarial examples have become an emblematic failure of deep learning, exhibiting fundamental shortcomings of today’s machine learning. How can we avoid them?

— Sebastien Bubeck (@SebastienBubeck) May 29, 2018

With @ecprice and @ilyaraz2 we suggest that they might in fact be computationally unavoidable: https://t.co/Ki2UEEUNap
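To make that fragility a bit more concrete, here is a toy sketch in Python. It is not how a real perception stack works: the "network" is a single linear score, and the weights, input and `EPSILON` are all made up for illustration. Rather than the random search mentioned above, it nudges every input feature by a tiny amount against the sign of the gradient (in the spirit of the fast gradient sign method), which is enough to flip the prediction.

```python
# Toy linear "network": score = w . x + b, predicted class = sign of the score.
# Every number here is a made-up assumption, chosen only to illustrate the idea.
N = 100
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(N)]
bias = 0.0


def sign(v):
    """Return -1, 0 or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def score(x):
    """Linear score; a positive value means the 'positive' class."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


# A clean input that the model puts in the positive class (score around +0.2).
x_clean = [0.5 + 0.02 * sign(w) for w in weights]

# For a linear model, the gradient of the score with respect to the input is
# simply `weights`, so moving each feature by EPSILON against sign(w) is the
# small per-feature change that lowers the score the most.
EPSILON = 0.05
x_adv = [xi - EPSILON * sign(w) for xi, w in zip(x_clean, weights)]

largest_change = max(abs(a - c) for a, c in zip(x_adv, x_clean))

print("clean score:      ", score(x_clean))
print("adversarial score:", score(x_adv))
print("largest change to any single feature:", largest_change)
```

No single feature moves by more than `EPSILON`, yet the score changes sign because the small nudges add up across all 100 inputs. The more inputs a model has, the smaller each nudge needs to be.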

Another fun part is when you start messing with AI and finance: by adding some tiny signal you could crash a pricing algorithm. The industry probably has high quality standards, but we’ve already seen quite a few flash crashes. I don’t know if there are any forensics available…

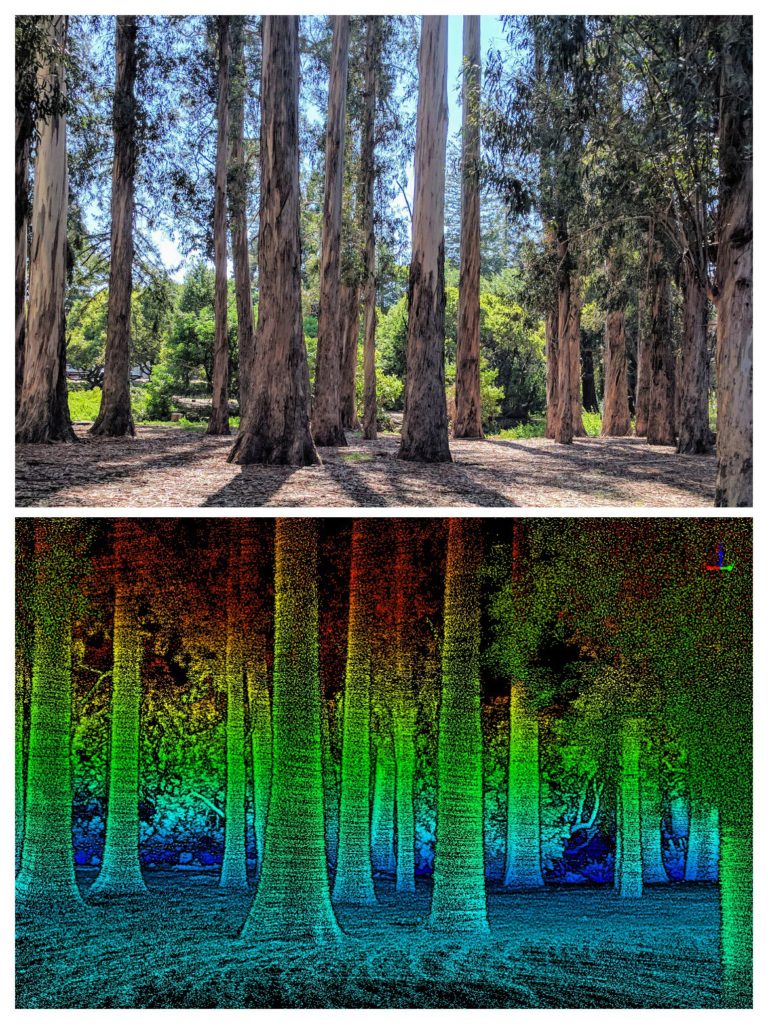

The numbers might be a little stale now (and the link broken), but it seems that the total number of miles driven is actually abysmally small: 10 million miles is basically what 50 cars would cover over their lifetime (200,000 miles each, that’s 128 days at 65 mph), or what is driven on the Bay Bridge in a little over a week (260,000 cars a day over 4.46 miles). Given the number of deadly accidents we’ve seen, we’re still far from a system that works (Teslas have driven over 1 billion miles and killed 3, so about 3 deaths per billion miles against 10 for the 30,000 fatalities over 3 trillion miles driven: better, but not by much).

There’s also a huge problem related to liability: when algorithms make mistakes, who is responsible: the car manufacturer, the car insurer, the… non-pilot? It’s a very hard problem to solve in a country where lawsuits are as common as orange poppies in California.
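For anyone who wants to redo the back-of-the-envelope math on miles driven, here is a small Python sketch. It only uses the figures quoted in this post (which may well be stale), and the variable names are mine.

```python
# Back-of-the-envelope check of the mileage figures quoted in the post.
TOTAL_AUTONOMOUS_MILES = 10_000_000  # "10 million miles"
CAR_LIFETIME_MILES = 200_000         # lifetime mileage of one car
HIGHWAY_SPEED_MPH = 65
BRIDGE_CARS_PER_DAY = 260_000        # Bay Bridge daily traffic
BRIDGE_LENGTH_MILES = 4.46

# How many car lifetimes do the autonomous miles represent?
cars_equivalent = TOTAL_AUTONOMOUS_MILES / CAR_LIFETIME_MILES

# How long is one car lifetime when driven non-stop at highway speed?
lifetime_days = CAR_LIFETIME_MILES / HIGHWAY_SPEED_MPH / 24

# How many days of Bay Bridge traffic add up to the same mileage?
bridge_miles_per_day = BRIDGE_CARS_PER_DAY * BRIDGE_LENGTH_MILES
bridge_days = TOTAL_AUTONOMOUS_MILES / bridge_miles_per_day


def deaths_per_billion_miles(deaths, miles):
    """Normalize a fatality count to deaths per billion miles driven."""
    return deaths / miles * 1e9


tesla_rate = deaths_per_billion_miles(3, 1e9)
us_rate = deaths_per_billion_miles(30_000, 3e12)

print(f"car lifetimes covered:        {cars_equivalent:.0f}")
print(f"one lifetime at 65 mph:       {lifetime_days:.0f} days")
print(f"Bay Bridge miles per day:     {bridge_miles_per_day:,.0f}")
print(f"days of Bay Bridge traffic:   {bridge_days:.1f}")
print(f"Tesla deaths / billion miles: {tesla_rate:.0f}")
print(f"US deaths / billion miles:    {us_rate:.0f}")
```

The two fatality rates are only comparable if the miles are (same roads, same conditions), which is a big assumption.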

Now if you’re talking about driverless trucks… the situation is not inherently better, as pointed out by Alexis Madrigal in his podcast Containers: most of the work for truck drivers involves logistics and the last mile, which are very difficult to automate since they obey a twisted logic.

At the heart of the problem is the sentiment, held by some in the tech industry, that because they have tools which can scale incredibly fast, they can solve problems others couldn’t. While in some cases this is true (say, achieving greater precision through fine calibration and automation), they often overlook the possible consequences of their actions. And while engineers follow codes of conduct and scientists follow ethics rules, software “engineers” and computer “scientists” don’t (Ian Bogost outlines how that’s problematic in his article Programmers: Stop Calling Yourselves Engineers).

One of the driving motivations behind this behavior is the desire to do away with all the networks that society as a whole has created and that make things work, and to replace them with the idea of the individual changemaker: a useful myth, but a myth nonetheless.

And oftentimes, after they have tried, they realize that… it’s hard to do better than what’s there already…

Nothing can be so amusingly arrogant as a young man who has just discovered an old idea and thinks it is his own.

– Sidney J. Harris

The truth is that self-driving cars fare better when they have a rail. I know, it’s called a train (or a tram), it sounds less sexy but it can do a lot…