Last week, my partner and I got married, and we both decided to take the same surname.

I will now be known as Antoine İşlegen-Wojdyla – edit your contact info!

Golden Gate Bridge (credit: Jake Ricker)

It’s been a few months since the ChatGPT craze started, and we’re finally seeing some interesting courses and guidelines, particularly for coding, where I found the whole thing quite impressive.

Ad hoc use of LLaMa

Here are a few that may be of interest; the list may grow over time (this is mostly a note to self.)

Plus – things are getting really crazy: Large language models encode clinical knowledge (Nature, Google Research.)

I leave Twitter for a few months, and the science world turns upside down!

The superconductivity community was simmering with the news that a new compound named LK-99 might be superconducting at room temperature. Eventually, things quenched abruptly, but not without an interesting foray into how science works nowadays, some good takes and decent media coverage.

I first learned about it when I read an article in Ars Technica, “What’s going on with the reports of a room-temperature superconductor?”, where I saw the name of my friend Sinéad popping up. She was in the spotlight because she had run some very complicated simulations to determine whether LK-99 could be a candidate for superconductivity, and found that the material does have some interesting features, namely volume collapse and flat bands, the latter being a common feature of superconductors.

Now that I have a captive audience…(welcome new followers!) a monster thread on what my paper says, the approximations and the caveats… (1/aleph) https://t.co/twSIsn1Ho9

— Sinéad Griffin (@sineatrix) August 2, 2023

Alas, it seems that the results from the initial paper could not be reproduced by other teams, who in passing found some interesting properties for this class of material. Inna Vishik, who ran the ALS UEC Seminar Series: Science Enabled by ALS-U with me, summarized it well:

“The detective work that wraps up all of the pieces of the original observation — I think that’s really fantastic,” she says. “And it’s relatively rare.”

LK-99 isn’t a superconductor — how science sleuths solved the mystery – Nature

There are a lot of things happening on the AI for Big Science front (AI for large-scale facilities, such as synchrotrons.)

The recently published DOE AI for Science, Energy, and Security Report provides interesting insights and a much-needed update to the AI for Science Report of 2020.

Computing facilities are upgrading to give scientists the tools to engage with the latest advances in machine learning. I recently visited NERSC’s Perlmutter supercomputer, and it is LOADED with GPUs for AI training.

Meanwhile, companies with large computing capabilities are making interesting forays into using AI for science. For instance, Meta is developing OpenCatalyst in collaboration with Carnegie Mellon University, with the goal of creating AI models that speed up the study of catalysts, which is generally very compute-intensive (see the Berkeley Lab Materials Project.) Now the cool part is verifying these results using x-ray diffraction at synchrotron facilities. Something similar happened with AlphaFold, where newly derived structures may need to be tested with x-rays at the Advanced Light Source: Deep-Learning AI Program Accurately Predicts Key Rotavirus Protein Fold (ALS News)

Things are moving in terms of Open Data! The Department of Energy has just released an update to its Public Access Plan (initially published in 2014), embracing the use of persistent identifiers for papers and data to promote the FAIR principles (Findability, Accessibility, Interoperability, and Reusability of data and metadata.)

And let me insist on the last bit:

Data without metadata is mostly useless

Back when Twitter was a nice place to share thoughts and disseminate bite-sized knowledge, I thought of Twitter posts/URLs as something akin to Digital Object Identifiers: you could post an image with a caption, and share the link on your blog or with anyone (now Twitter doesn’t make it so easy to share those.) Zenodo lets you create actual DOIs for your data (the record includes your ORCID and metadata), albeit in a less user-friendly way, and to some extent GitHub works the same way (though its visualization of graphical content is not the best.)
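To make the “data without metadata” point concrete, here is a minimal Python sketch of the metadata record one might attach to a dataset before minting a DOI on Zenodo. The field names (upload_type, title, description, creators with name/affiliation/orcid, keywords) follow Zenodo’s legacy deposition REST API as I understand it; treat them as an assumption and check the current Zenodo documentation. The helper names are hypothetical, and the code only builds the JSON: actually uploading it would mean sending it to Zenodo’s deposition endpoint with a personal access token.

```python
import json


def creator(name, affiliation=None, orcid=None):
    """One entry of the 'creators' list (assumed Zenodo convention: 'Family, Given')."""
    entry = {"name": name}
    if affiliation:
        entry["affiliation"] = affiliation
    if orcid:
        entry["orcid"] = orcid  # ties the record to the author's ORCID iD
    return entry


def deposition_metadata(title, description, creators, keywords=()):
    """Assemble a dataset deposition payload: metadata only, no files, no network calls."""
    if not creators:
        raise ValueError("a DOI record needs at least one creator")
    metadata = {
        "upload_type": "dataset",
        "title": title,
        "description": description,
        "creators": list(creators),
    }
    if keywords:
        metadata["keywords"] = list(keywords)
    return {"metadata": metadata}


if __name__ == "__main__":
    # Hypothetical example: the name, lab and ORCID below are placeholders.
    payload = deposition_metadata(
        title="Example beamline dataset",
        description="Raw detector frames plus acquisition parameters.",
        creators=[creator("Doe, Jane", affiliation="Example Lab", orcid="0000-0000-0000-0000")],
        keywords=["synchrotron", "FAIR"],
    )
    print(json.dumps(payload, indent=2))
```

The point of the sketch is that the DOI itself is cheap; what makes the data findable and reusable is the structured record (who, what, how) that travels with it.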

At Berkeley Lab, the Office of Research Compliance has updated its guidelines, providing excellent resources to build a Data Management Plan.

Last week I was lucky to meet with Vanessa Chan, the Chief Commercialization Officer for the Department of Energy and Director of the Office of Technology Transitions. She wanted to hear about the hurdles researchers face when it comes to starting a company (hint: a lot.) I told her that a major, overlooked issue is that you generally need to be a permanent resident to start a company in the US, whereas two-thirds of postdocs are foreign nationals on visas. There are ways to get around the requirement (such as Unshackled), but it’s a little sad that more isn’t done to support those willing and able (plus, it is well known that many US companies are founded by foreign nationals, which I believe is part of what sets California apart from other states and other countries, where entrepreneurship doesn’t flourish as much as expected despite many efforts.)

Making a presentation to an audience is important to get your ideas across, and while communication is a basic human trait, communicating effectively requires some thought (TED speakers go through thorough training to get their point across.)

Here are a few things I’ve learned, for the benefit of postdocs, who can easily drown their audience. They are based on my experience, but there are many resources around the web to draw from.

(These are stretch goals; I rarely follow these rules myself, but they are useful if you don’t know where to start.)

- Use the 16:9 format (these days most presentations are online, and most screens are wide).
- Start with an outline.
- Use the title to summarize the main point of the slide; that is often the best use of a title.
- No text should be smaller than 18 pt.
- Use animations sparingly, to expose your thoughts point by point. Avoid fancy animations or transitions between slides.
- No more than one slide per minute (if there are more, you can probably merge a few points).

I am a native French speaker, and I have always been confused by the ubiquity of English, a language that is actually quite difficult to speak (why are tough, though, thought and enough pronounced so differently?) I was also puzzled by the difference between liberty and freedom. No one could ever explain it to me, even though “freedom” is probably the most overused concept in American society (French has “Liberté” in its national motto, but it has nothing to do with “free” as in “free sample.”)

Finally, I found an interesting explanation by Jorge Luis Borges, who sees this as a feature, not a bug:

I have done most of my reading in English. I find English a far finer language than Spanish.

Firstly, English is both a Germanic and a Latin language. Those two registers—for any idea you take, you have two words. Those words will not mean exactly the same. For example if I say “regal” that is not exactly the same thing as saying “kingly.” Or if I say “fraternal” that is not the same as saying “brotherly.” Or “dark” and “obscure.” Those words are different. It would make all the difference—speaking for example—the Holy Spirit, it would make all the difference in the world in a poem if I wrote about the Holy Spirit or I wrote the Holy Ghost, since “ghost” is a fine, dark Saxon word, but “spirit” is a light Latin word. Then there is another reason.

The reason is that I think that, of all languages, English is the most physical of all languages. You can, for example, say “He loomed over.” You can’t very well say that in Spanish.

And then you have, in English, you can do almost anything with verbs and prepositions. For example, to “laugh off,” to “dream away.” Those things can’t be said in Spanish. To “live down” something, to “live up to” something—you can’t say those things in Spanish. They can’t be said. Or really in any Roman language.

I really enjoy this notion of physicality: onomatopoeia are a vibrant part of the language (whisper, gulp, slam, rumble, slushy, etc.)

Lately, I’ve been very much into all things Turkish, for some reason. It turns out there’s a lot of great music emanating from this country at the intersection of continents, from artists there and abroad.

Anadol is a Berlin-based artist whose music sits somewhere between dream pop and ambient – so good!

“I suppose in about a fortnight we shall be told that he has been seen in San Francisco. It is an odd thing, but everyone who disappears is said to be seen at San Francisco. It must be a delightful city, and possess all the attractions of the next world.”

― Oscar Wilde, The Picture of Dorian Gray.

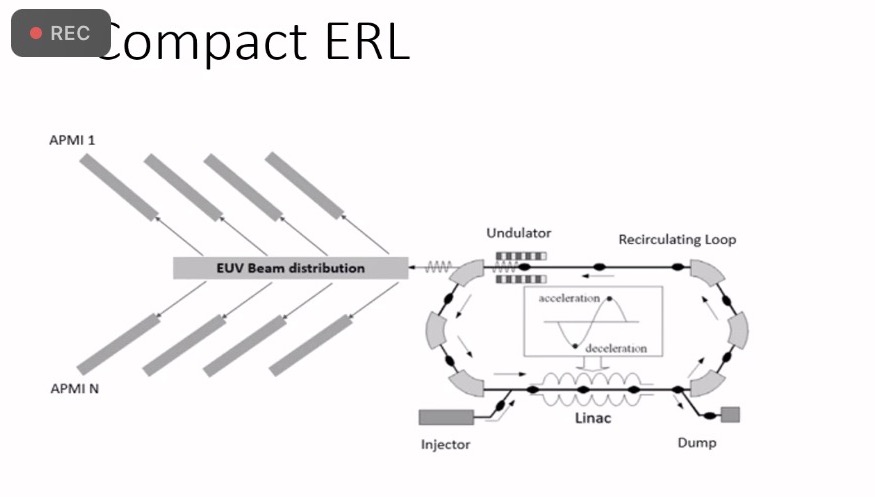

Lately, many of my colleagues have been leaving DOE light sources (Berkeley Lab and SLAC National Lab) to work for startup companies, and they all seem to be trying to build better sources (probably based on Free Electron Lasers.)

Such companies include Tau Systems, xLight and a third one that seems to be in stealth mode but looks like a giant black hole, given the pool of talent it has managed to attract.

Good EUV sources have always been a problem, and the current solution, laser-produced plasma, which blasts 40 kW of CO2 laser power onto 100 µm tin beads at kHz rates to generate ~200 W of EUV, is totally crazy, but it works. Still, there must be better ways to do it, and given the unit cost of an EUV lithography scanner ($200M), improving uptime and productivity even by a few percent would be extremely valuable…

I’m wishing good luck to my colleagues going this route; this is quite exciting!
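A quick back-of-the-envelope check of the numbers above, as a minimal Python sketch. The conversion efficiency is simply EUV output over drive-laser power, using the figures quoted in this post; the “value” of a productivity gain is a deliberately crude, hypothetical proxy (gain × scanner price) that ignores operating costs, wafer throughput and everything else a fab actually tracks.

```python
def conversion_efficiency(euv_power_w, drive_laser_power_w):
    """Fraction of drive-laser power that comes out as EUV light."""
    return euv_power_w / drive_laser_power_w


def capital_equivalent(scanner_cost_usd, productivity_gain):
    """Crude, hypothetical proxy: a fractional gain is worth that fraction of the scanner price."""
    return scanner_cost_usd * productivity_gain


# Figures quoted in the post: ~200 W of EUV from 40 kW of CO2 laser power, $200M per scanner.
eff = conversion_efficiency(200.0, 40e3)
print(f"laser-to-EUV conversion: {eff:.1%}")  # 0.5%

for gain in (0.01, 0.02, 0.05):
    value = capital_equivalent(200e6, gain)
    print(f"{gain:.0%} gain ~ ${value / 1e6:.0f}M per scanner")
```

In other words, roughly 99.5% of the laser power does not end up as usable EUV, which is why even modest improvements to the source are so attractive.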
